Deep Learning has received a lot of attention over the past few years and has been employed successfully by companies like Google, Microsoft, IBM, Facebook, Twitter etc. to solve a wide range of problems in Computer Vision and Natural Language Processing. In this course we will learn about the building blocks used in these Deep Learning based solutions. Specifically, we will learn about feedforward neural networks, convolutional neural networks, recurrent neural networks and attention mechanisms. We will also look at various optimization algorithms such as Gradient Descent, Nesterov Accelerated Gradient Descent, Adam, AdaGrad and RMSProp which are used for training such deep neural networks. At the end of this course students would have knowledge of deep architectures used for solving various Vision and NLP tasks

Nptel Deep Learning – IIT Ropar Week 1 Assignment Answer

Course layout

Week 2 : Multilayer Perceptrons (MLPs), Representation Power of MLPs, Sigmoid Neurons, Gradient Descent, Feedforward Neural Networks, Representation Power of Feedforward Neural Networks

Week 3 : FeedForward Neural Networks, Backpropagation

Week 4 : Gradient Descent (GD), Momentum Based GD, Nesterov Accelerated GD, Stochastic GD, AdaGrad, RMSProp, Adam, Eigenvalues and eigenvectors, Eigenvalue Decomposition, Basis

Week 5 : Principal Component Analysis and its interpretations, Singular Value Decomposition

Week 6 : Autoencoders and relation to PCA, Regularization in autoencoders, Denoising autoencoders, Sparse autoencoders, Contractive autoencoders

Week 7 : Regularization: Bias Variance Tradeoff, L2 regularization, Early stopping, Dataset augmentation, Parameter sharing and tying, Injecting noise at input, Ensemble methods, Dropout

Week 8 : Greedy Layerwise Pre-training, Better activation functions, Better weight initialization methods, Batch Normalization

Week 9 : Learning Vectorial Representations Of Words

Week 10: Convolutional Neural Networks, LeNet, AlexNet, ZF-Net, VGGNet, GoogLeNet, ResNet, Visualizing Convolutional Neural Networks, Guided Backpropagation, Deep Dream, Deep Art, Fooling Convolutional Neural Networks

Week 11: Recurrent Neural Networks, Backpropagation through time (BPTT), Vanishing and Exploding Gradients, Truncated BPTT, GRU, LSTMs

Week 12: Encoder Decoder Models, Attention Mechanism, Attention over images

Nptel Deep Learning – IIT Ropar Week 1 Assignment Answer

Week 1 : Assignment 1

Common data for questions 1,2 and 3

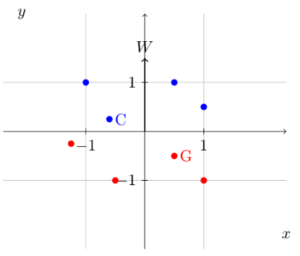

In the figure shown below, the blue points belong to class 1 (positive class) and the red points belong to class 0 (negative class). Suppose that we use a perceptron model, with the weight vector w as shown in the figure, to separate these data points. We define the point belongs to class 1 if wTx≥0

wTx≥0 else it belongs to class 0.

The points G

G and C

C will be classified as?

Note: the notation (G,0)

(G,0) denotes the point G

G will be classified as class-0 and (C,1)

(C,1) denotes the point C

C will be classified as class-1

(C,0),(G,0)

(C,0),(G,0)

(C,0),(G,1)

(C,0),(G,1)

(C,1),(G,1)

(C,1),(G,1)

(C,1),(G,0)

(C,1),(G,0)

Suppose that we multiply the weight vector w by −1. Then the same points G

G and C

C will be classified as?

(C,0),(G,0)

(C,0),(G,0)

(C,0),(G,1)

(C,0),(G,1)

(C,1),(G,1)

(C,1),(G,1)

(C,1),(G,0)

(C,1),(G,0)

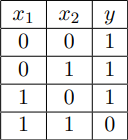

Consider the following table, where x1

x1 and x2

x2 are features and y

y is a label.

Assume that the elements in w are initialized to zero and the perception learning algorithm is used to update the weights w. If the learning algorithm runs for long enough iterations, then

We know from the lecture that the decision boundary learned by the perceptron is a line in R2

R2. We also observed that it divides the entire space of R2

R2 into two regions, suppose that the input vector x∈R4

x∈R4, then the perceptron decision boundary will divide the whole R4

R4 space into how many regions?

Choose the correct input-output pair for the given MP Neuron.

f(x)={1,0,ifx1+x2+x3<2otherwise

f(x)={1,ifx1+x2+x3<20,otherwise

y=1

y=1 for (x1,x2,x3)

(x1,x2,x3) = (0, 0, 0)

y=0

y=0 for (x1,x2,x3)

(x1,x2,x3) = (0, 0, 1)

y=1

y=1 for (x1,x2,x3)

(x1,x2,x3) = (1, 0, 0)

y=1

y=1 for (x1,x2,x3)

(x1,x2,x3) = (1, 1, 1)

y=0

y=0 for (x1,x2,x3)

(x1,x2,x3) = (1, 0, 1)

Consider the following table, where x1

x1 and x2

x2 are features (packed into a single vector x=[x1x2]

x=[x1x2]) and y

y is a label:

Suppose that the perceptron model is used to classify the data points. Suppose further that the weights w are initialized to w = [11]

[11]. The following rule is used for classification,

y={10ifwTx>0ifwTx≤0

y={1ifwTx>00ifwTx≤0

The perceptron learning algorithm is used to update the weight vector w. Then, how many times the weight vector w will get updated during the entire training process?

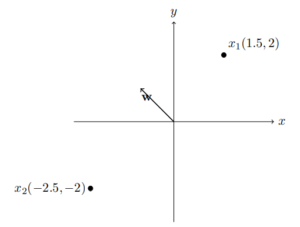

Consider points shown in the picture. The vector w = [−11]

[−11].As per this weight vector, the Perceptron algorithm will predict which classes for the data points x1

x1 and x2

x2.

NOTE: y={10ifwTx>0ifwTx≤0

y={1ifwTx>00ifwTx≤0

x1

x1 = −1

x1

x1 = 1

x2

x2 = −1

x2

x2 = 1